[English Original] Stable Diffusion 3 Technical Report

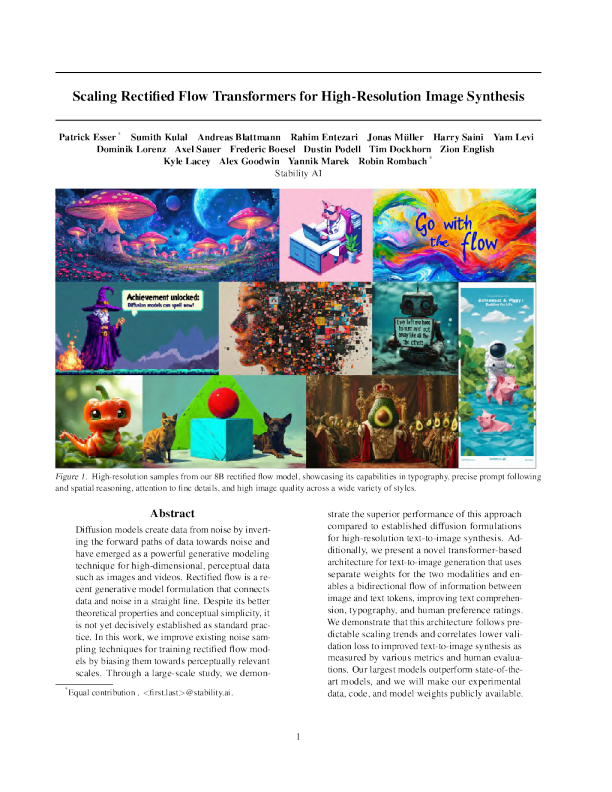

Scaling Rectified Flow Transformers for High-Resolution Image Synthesis

Patrick Esser*, Sumith Kulal, Andreas Blattmann, Rahim Entezari, Jonas Müller, Harry Saini, Yam Levi, Dominik Lorenz, Axel Sauer, Frederic Boesel, Dustin Podell, Tim Dockhorn, Zion English, Kyle Lacey, Alex Goodwin, Yannik Marek, Robin Rombach*

Stability AI

*Equal contribution. <first.last>@stability.ai.

Figure 1. High-resolution samples from our 8B rectified flow model, showcasing its capabilities in typography, precise prompt following and spatial reasoning, attention to fine details, and high image quality across a wide variety of styles.

Abstract

Diffusion models create data from noise by inverting the forward paths of data towards noise and have emerged as a powerful generative modeling technique for high-dimensional, perceptual data such as images and videos. Rectified flow is a recent generative model formulation that connects data and noise in a straight line. Despite its better theoretical properties and conceptual simplicity, it is not yet decisively established as standard practice. In this work, we improve existing noise sampling techniques for training rectified flow models by biasing them towards perceptually relevant scales. Through a large-scale study, we demonstrate the superior performance of this approach compared to established diffusion formulations for high-resolution text-to-image synthesis. Additionally, we present a novel transformer-based architecture for text-to-image generation that uses separate weights for the two modalities and enables a bidirectional flow of information between image and text tokens, improving text comprehension, typography, and human preference ratings. We demonstrate that this architecture follows predictable scaling trends and correlates lower validation loss to improved text-to-image synthesis as measured by various metrics and human evaluations. Our largest models outperform state-of-the-art models, and we will make our experimental data, code, and model weights publicly available.
1. Introduction

Diffusion models create data from noise (Song et al., 2020). They are trained to invert forward paths of data towards random noise and, thus, in conjunction with approximation and generalization properties of neural networks, can be used to generate new data points that are not present in the training data but follow the distribution of the training data (Sohl-Dickstein et al., 2015; Song & Ermon, 2020). This generative modeling technique has proven to be very effective for modeling high-dimensional, perceptual data such as images (Ho et al., 2020). In recent years, diffusion models have become the de-facto approach for generating high-resolution images and videos from natural language inputs with impressive generalization capabilities (Saharia et al., 2022b; Ramesh et al., 2022; Rombach et al., 2022; Podell et al., 2023; Dai et al., 2023; Esser et al., 2023; Blattmann et al., 2023b; Betker et al., 2023; Blattmann et al., 2023a; Singer et al., 2022). Due to their iterative nature and the associated computational costs, as well as the long sampling times during inference, research on formulations for more efficient training and/or faster sampling of these models has increased (Karras et al., 2023; Liu et al., 2022).

While specifying a forward path from data to noise leads to efficient training, it also raises the question of which path to choose. This choice can have important implications for sampling. For example, a forward process that fails to remove all noise from the data can lead to a discrepancy in training and test distribution and result in artifacts such as gray image samples (Lin et al., 2024). Importantly, the choice of the forward process also influences the learned backward process and, thus, the sampling efficiency. While curved paths require many integration steps to simulate the process, a straight path could be simulated with a single step and is less prone to error accumulation. Since each step corresponds to an evaluation of the neural network, this has a direct impact on the sampling speed.
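The straight-versus-curved path argument above can be illustrated with a small numerical sketch. It is not the authors' code. It assumes a single known pair of data point `x0` and noise sample `eps`, and it integrates the exact ("oracle") velocity of each path rather than a learned network. The cosine path and all function names are hypothetical choices made only for contrast.

```python
import math

def straight_path(x0, eps, t):
    """Straight-line interpolation: data x0 at t=0, noise eps at t=1."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, eps)]

def straight_velocity(x0, eps, t):
    """Time derivative of the straight path; it does not depend on t."""
    return [b - a for a, b in zip(x0, eps)]

def curved_path(x0, eps, t):
    """A curved (cosine) path between x0 and eps, used only for contrast."""
    c = math.cos(0.5 * math.pi * t)
    s = math.sin(0.5 * math.pi * t)
    return [c * a + s * b for a, b in zip(x0, eps)]

def curved_velocity(x0, eps, t):
    """Time derivative of the curved path; it changes with t."""
    c = math.cos(0.5 * math.pi * t)
    s = math.sin(0.5 * math.pi * t)
    return [0.5 * math.pi * (-s * a + c * b) for a, b in zip(x0, eps)]

def euler_from_noise(velocity, x0, eps, steps):
    """Integrate dz/dt = velocity backwards from t=1 (noise) to t=0 (data)
    using `steps` explicit Euler steps of equal size."""
    z = list(eps)
    dt = 1.0 / steps
    for i in range(steps):
        t = 1.0 - i * dt
        v = velocity(x0, eps, t)
        z = [zi - dt * vi for zi, vi in zip(z, v)]
    return z

def max_error(z, x0):
    """Largest absolute coordinate-wise deviation from the data point."""
    return max(abs(a - b) for a, b in zip(z, x0))

if __name__ == "__main__":
    x0 = [1.0, -2.0]   # toy "data" point (assumption)
    eps = [0.3, 0.7]   # toy "noise" sample (assumption)
    for name, vel in [("straight", straight_velocity), ("curved", curved_velocity)]:
        for steps in (1, 10, 1000):
            err = max_error(euler_from_noise(vel, x0, eps, steps), x0)
            print(f"{name:8s} steps={steps:4d} max_error={err:.6f}")
```

Because the straight path has a constant velocity, one Euler step recovers `x0` up to floating-point rounding, whereas the curved path only approaches `x0` as the number of steps grows. In practice the velocity is predicted by a trained network from the current sample and time, so this idealized single-step behavior is an upper bound on what a straight path can offer, not a guarantee.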
A particular choice for the forward path is a so-called Rectified Flow (Liu et al., 2022; Albergo & Vanden-Eijnden, 2022; Lipman et al., 2023), which connects data and noise on a straight line. Although this model class has better theoretical properties, it has not yet become decisively established in practice. So far, some advantages have been empirically demonstrated in small and medium-sized experiments (Ma et al., 2024), but these are mostly limited to class-conditional models. In this work, we change this by introducing a re-weighting of the noise scales in rectified flow models, similar to noise-predictive diffusion models (Ho et al., 2020). Through a large-scale study, we compare our new formulation to existing diffusion formulations and demonstrate its benefits.

We show that the widely used approach for text-to-image synthesis, where a fixed text representation is fed directly into the model (e.g., via cross-attention (Vaswani et al., 2017; Rombach et al., 2022)), is not ideal, and present a new architecture that incorporates learnable streams for both image and text tokens, which enables a two-way flow of information between them. We combine this with our improved rectified flow formulation and investigate its scalability. We demonstrate a predictable scaling trend in the validation loss and show that a lower validation loss correlates strongly with improved automatic and human evaluations.

Our largest models outperform state-of-the-art open models such as SDXL (Podell et al., 2023), SDXL-Turbo (Sauer et al., 2023), Pixart-α (Chen et al., 2023), and closed-source models such as DALL-E 3 (Betker et al., 2023) both in quantitative evaluation (Ghosh et al., 2023) of prompt understanding and human preference ra